Universal Translator

A Method for Building a Speech Translator App using Azure AI Services

Overview

This guide is an answer to a Microsoft challenge to create a speech translator app. It demonstrates how to build a speech translator in Power Apps using the Speech service from Azure AI Services. Recording, transcription, translation and read-aloud functionality will be covered.

Use-Case

Borrowing from the challenge:

Fabrikam is a fictitious multi-national company with several employees and offices around the world. Fabrikam host annual Employee thanksgiving event that results into gathering of over 100,000 employees across over 70 countries for a five (5) days celebration.

With the diversity of the participants, there are noticeable language barriers that often affect collaboration, communication, and networking. Fabrikam is looking forward to addressing this challenge in this year's event and they need you to build the solution.

In other words: the upcoming event is sure to be a boondoggle, but we need a translator to make sure everyone can participate.

Caveats

A few things to know before you go.

Product terminology. In July 2023, Cognitive Services and Azure Applied AI Services became part of what is now known as Azure AI services. Some terms may vary if you read up on this area.

Premium licenses for REST APIs and connectors. A combination of REST API calls and platform connectors is used, so a Premium subscription is required.

Batch Speech-to-Text vs Speech-to-Text. Batch is pre-recorded audio. Speech-to-Text is real-time. We are using Batch today for short audio.

Limitations. Recordings may need to be kept short when using the REST API (either the HTTP endpoint or the out-of-the-box connector). The SDK is the next level. See the limitations of this particular AI service here.

Microsoft Challenge. The impetus to build this app came from Leandro Carvalho, who posted the Microsoft Challenge to build a speech translator app using AI Services a few weeks back. This guide borrows Microsoft content from the modules and leverages the challenge use-case to build a helpful communication tool for a multi-national firm.

Potential cost. This may cost you some money if you do not have Azure credit or fun funds to burn. Have a look at the cost tools in the Azure portal to keep an eye on the charges, depending on your implementation. This can be accomplished using free tiers, though, with usage limits.

Do not reveal sensitive info. Consider using Azure Key Vault to store keys, secrets, and other sensitive info. This article does not include this aspect for simplicity, but architectural diagrams are available as well as guides on how to encrypt/decrypt sensitive info.

Watch your regions. Create and use resources within a single region to avoid cross-region data transfer charges.

Audio file format issue. The Speech service requires WAV format. Using the HTTP endpoint to transcribe audio recorded in a Power App requires an Azure Function to convert the app MP3 audio to WAV format. The template flow from Archer Zhao at Microsoft uses the Batch Speech-to-Text connector with no issues.

If using an Azure Function presents no issue, have a look at this method.

A few moving parts with this one.

Prerequisites

Basic requirements.

Power Apps developer account

Power Automate developer account

Azure account.

Azure resources. Listed below.

Some chunky stuff.

Steps to Implement

Building a translator follows a general process using Azure and Power Platform.

We have our steps. Let’s begin.

Create resources in Azure.

Some setup required in Azure. We will need these resources:

Azure Blob Storage. For storing files - audio files, transcriptions, and their text reports.

Key Vault. For storing sensitive information (keys, etc.).

AI resources.

Choose one:

Multi-service AI resource. Creates an endpoint and key to access multiple AI services. One key, multiple services. May centralize resources, usage, and their cost.

Speech Service. Creates an endpoint and key to access. One key, one service.

Translator. Service to handle translation.

Note: Keep track of the access keys that are issued. They are sensitive info, as they are the credentials for using the endpoints.

Azure AI Services, namely its Speech Service, serves as the generative engine of this AI solution.

The Speech service provides various language services using a Speech resource. A number of high-power tools are available: voice transcription, voice creation, text to speech voices, translation of spoken audio, and speaker recognition.

Navigate to the Microsoft Azure portal and create the services and resources.

Here are the endpoints for each of the AI services the app will use:

Speech-to-Text service:

For live events, streaming, etc.

https://[region].stt.speech.microsoft.com/speech/recognition/conversation/cognitiveservices/v1

Batch Speech-to-Text:

For large files, saved audio content, etc.

Upload audio: https://[region].cris.ai/uploads

Start transcription: https://[region].cris.ai/transcriptions

Translator service

https://api.cognitive.microsofttranslator.com/translate?api-version=3.0&from=[source-language-code]&to=[target-language-code]

Text-to-Speech service

https://[region].tts.speech.microsoft.com/cognitiveservices/v1

These will be used later on. Naturally, replace anything in brackets with an actual value (region, to/from language codes, etc.).
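To make the bracket substitution concrete, here is a minimal Python sketch that fills in the Translator and Text-to-Speech endpoint templates listed above. The function names are my own for illustration and are not part of any Azure SDK; the region and language values are placeholders.

```python
from urllib.parse import urlencode


def translator_url(source_language: str, target_language: str) -> str:
    """Fill in the Translator endpoint template with from/to language codes."""
    query = urlencode(
        {"api-version": "3.0", "from": source_language, "to": target_language}
    )
    return f"https://api.cognitive.microsofttranslator.com/translate?{query}"


def text_to_speech_url(region: str) -> str:
    """Fill in the Text-to-Speech endpoint template with the resource region."""
    if not region:
        raise ValueError("region must not be empty")
    return f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1"


if __name__ == "__main__":
    # Placeholder values; use the region and languages of your own resources.
    print(translator_url("en", "es"))
    print(text_to_speech_url("westus"))
```

In the flows themselves, the same substitution happens in the HTTP action's URI field, with the language codes supplied by the app.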

Be sure to keep note of the keys that are created along with each resource.

Create tables in Dataverse.

The challenge requires Dataverse custom tables to capture the conversation. Here are some Microsoft recommendations for storing the speech recognition and translation data in Dataverse tables.

SpeechSession. Stores metadata for each speech interaction.

SessionId (primary key)

UserId (lookup to user table)

LanguageSpoken (language code)

LanguageTranslatedTo (language code)

CreatedDateTime

SpeechUtterance. Stores each utterance in a session.

UtteranceId (primary key)

SessionId (foreign key to SpeechSession)

UtteranceText (recognized speech text)

UtteranceTranslation (translated text)

UtteranceOrder

SpeechModel. Tracks the Speech API model used.

SpeechSessionId

ModelId

LanguageCode

CreatedDateTime

A one-to-many relationship between SpeechSession and SpeechUtterance tables is suggested.
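As a rough mental model of that relationship, the two main tables can be sketched as Python dataclasses. This is only an illustration of the shape of the data, not how Dataverse stores it; the snake_case field names mirror the columns above, and the `add_utterance` helper is hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List
from uuid import uuid4


@dataclass
class SpeechUtterance:
    session_id: str  # foreign key back to the parent SpeechSession
    utterance_text: str
    utterance_translation: str
    utterance_order: int
    utterance_id: str = field(default_factory=lambda: str(uuid4()))


@dataclass
class SpeechSession:
    user_id: str
    language_spoken: str
    language_translated_to: str
    session_id: str = field(default_factory=lambda: str(uuid4()))
    created: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    utterances: List[SpeechUtterance] = field(default_factory=list)

    def add_utterance(self, text: str, translation: str) -> SpeechUtterance:
        """Attach a child utterance; order is its 1-based position in the session."""
        utterance = SpeechUtterance(
            session_id=self.session_id,
            utterance_text=text,
            utterance_translation=translation,
            utterance_order=len(self.utterances) + 1,
        )
        self.utterances.append(utterance)
        return utterance
```

One session owns many utterances, and every utterance carries the SessionId of its parent, which is what the Dataverse relationship enforces.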

Create a relationship in Dataverse.

To confirm the relationship is set and active, we can check the Relationships via the table overview.

Entity Relation Diagram Creator in XrmToolBox is another option.

Any way you like so long as the relationship is functional.

Create a canvas app in Power Apps.

Build your canvas app as you would like it. This is a speech translator, so the app should include the features of a similar utility.

Convert user’s speech to text.

Translate the text to the desired language.

Speak out the translated text using the Speech Service API.

Support multiple languages and dialects.

A quick one to get things working:

For the final version, read on.

Build flows in Power Automate.

Instant flows integrate the app with the Speech service. The orchestrator provides the link to the Speech APIs using the Batch Speech-to-Text and HTTP connectors.

A few flows run the show. They could probably be condensed, but the audio bit is available on demand (via button click), as a user may only need a text translation.

Flow 1: Transcribe and translate audio.

This flow kicks off the entire process. It borrows from the work of Archer Zhao, whose work can be viewed here. The template flow that he uses in the video can be found in the Power Automate template gallery.

The template flow uses the Batch Speech-to-Text connector (which is a ready-made REST API) and passes the transcribed text to the Translator API to translate text into another language.

A few adjustments were made to the flow to accommodate our use-case. Only these will be covered, as the rest of the flow is unchanged.

Optional File Format Condition

The condition block has been removed, as dedicated storage is assumed. Feel free to keep this part.

The method is easy to implement - only the display name of the object needs to be changed.

Trigger Changed from Create/Edit Trigger to Instant

In the template, the flow is kicked off automatically when an item is created or modified in storage. Here, the transcribe-and-translate flow runs as an instant flow instead of an automated one.

The AzureBlobStorage connector first saves the file to storage and the flow uses the path once returned for the subsequent action.

The blob path can come from the app via Ask in PowerApps, or a variable can be initialized and set earlier, then used here.

We now have a valid URL and access token to the blob; it can be used in subsequent actions via the Dynamic Content menu.
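For readers calling the service over HTTP rather than through the connector, the sketch below shows roughly what a batch transcription request body looks like once the blob URL is known. It assumes the v3.x Speech-to-Text REST API, where the create-transcription request takes `contentUrls`, `locale`, and `displayName`; the function name and the example URL are hypothetical, and the connector builds this payload for you.

```python
import json


def build_transcription_request(blob_sas_url: str, locale: str, display_name: str) -> dict:
    """Build the JSON body for a batch transcription job pointing at one audio blob."""
    if not blob_sas_url.startswith("https://"):
        raise ValueError("the blob URL must be an https URL the service can read")
    return {
        "contentUrls": [blob_sas_url],  # URL plus access token from the previous step
        "locale": locale,               # language spoken in the recording
        "displayName": display_name,    # free-text label for the job
    }


if __name__ == "__main__":
    # Placeholder URL for illustration only.
    body = build_transcription_request(
        "https://example.blob.core.windows.net/audio/clip.wav?sig=placeholder",
        "en-US",
        "Universal Translator demo",
    )
    print(json.dumps(body, indent=2))
```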

Results Sent to Translator API Post-Transcription

The most significant changes can be found deep in Archer’s flow.

Find case #2 in the condition for the Switch action (you need to dig). We can pass the transcription file contents to the Translator API to generate a translation.

The translation actions happen after the Apply to each recognition phrase step.

Use the body from the Parse json - Result action for the Body in the HTTP action.

[
  {
    "text": body('Parse_JSON_-_result')?['combinedRecognizedPhrases'][0]?['display']
  }
]

The translation is then tacked onto an array crafted via Compose action.

These “two worlds” can be combined via expression.

/*Transcription*/

body('Parse_JSON_-_Parse_result')?['combinedRecognizedPhrases'][0]['display']

/*Translation*/

outputs('Parse_JSON_-_Return_translation_from_Translator_endpoint')?['body'][0]?['translations'][0]?['text']

The output is summarily returned to the app using the Response action.

Note the Response Body JSON Schema. Optional, but this will validate what is returned to the app.
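To show what the two expressions above are pulling out, here is a small Python sketch that reads the same paths from a transcription result and a Translator response, then shapes the pair into the object returned to the app. The sample payloads are trimmed, made-up illustrations, and the function name is hypothetical; the `transcription` and `translation` keys match the properties the app reads from the flow response.

```python
def build_app_response(transcription_result: dict, translator_result: list) -> dict:
    """Extract the combined transcript and first translation; fall back to empty strings."""
    phrases = transcription_result.get("combinedRecognizedPhrases") or []
    transcription = phrases[0].get("display", "") if phrases else ""

    translations = translator_result[0].get("translations", []) if translator_result else []
    translation = translations[0].get("text", "") if translations else ""

    return {"transcription": transcription, "translation": translation}


if __name__ == "__main__":
    # Illustrative payloads only, trimmed to the fields used above.
    sample_transcription = {
        "combinedRecognizedPhrases": [{"channel": 0, "display": "Where is the weight room?"}]
    }
    sample_translation = [
        {"translations": [{"text": "¿Dónde está la sala de pesas?", "to": "es"}]}
    ]
    print(build_app_response(sample_transcription, sample_translation))
```

The empty-string fallbacks play the same role as the `?` operators in the flow expressions: a missing property yields a blank value instead of a failed run.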

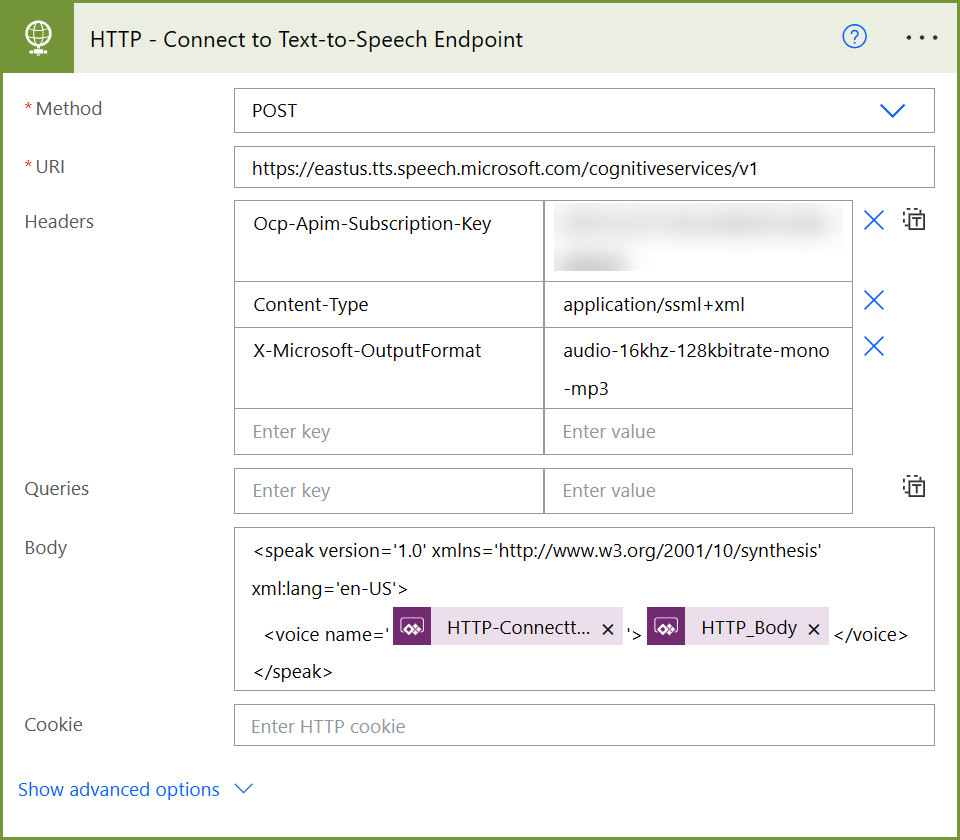

Flow 2: Create audio translation.

If the user wants or needs to listen to the audio, a trigger creates an audio file with an AI voice and returns the hyperlink for playback in the app. This flow uses the Text-to-Speech API.

Create an HTTP action using SSML markup.

<speak version='1.0' xmlns='http://www.w3.org/2001/10/synthesis' xml:lang='en-US'> <voice name='en-US-Guy24kRUS'>Your translated text here.</voice> </speak>

Instead of using en-US-Guy24kRUS as the voice name value, select Ask in PowerApps in the Dynamic Content window. A drop-down in the app contains the voices available to the user. The flow will use that input.

Do the same for the content within the voice tags: select Ask in PowerApps, as a button click will send the text to be voiced.
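One thing the flow does not do on its own is escape the text dropped between the voice tags. As a hedged sketch of the idea, the Python below builds the same SSML document from a voice name and a translation, escaping characters such as `&` and `<` that would otherwise break the markup. The function names are my own; the voice name in the example is the one from the markup above.

```python
from xml.sax.saxutils import escape


def _attr(value: str) -> str:
    """Escape a value for use inside a single-quoted XML attribute."""
    return escape(value, {"'": "&apos;", '"': "&quot;"})


def build_ssml(text: str, voice_name: str, lang: str = "en-US") -> str:
    """Build the SSML body for a Text-to-Speech request."""
    return (
        "<speak version='1.0' xmlns='http://www.w3.org/2001/10/synthesis' "
        f"xml:lang='{_attr(lang)}'>"
        f"<voice name='{_attr(voice_name)}'>{escape(text)}</voice>"
        "</speak>"
    )


if __name__ == "__main__":
    print(build_ssml("Fish & chips for 2 < 3 people", "en-US-Guy24kRUS"))
```

In Power Automate, a comparable effect could be achieved with nested `replace()` expressions on the incoming text before it reaches the HTTP action.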

Once the file is created in storage:

An access token is generated:

So, the audio URL is accessible once passed back to the app:

And then, there was sound.

Flow 3: Get AI voice list

The service provides more than 400 AI voices for reading translations aloud. A simple HTTP action returns the list, which lets the user select the voice.

Subsequent actions will conform the data and return a clean collection to the app.
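The shaping step can be pictured with a short Python sketch. It assumes each item in the voice list response carries `ShortName`, `DisplayName`, `Locale`, and `Gender` properties, and reduces them to a small, sorted collection suitable for a drop-down. The output keys (`code`, `label`, `locale`) and the function name are hypothetical choices, and the sample entries are trimmed illustrations.

```python
from typing import List, Optional


def shape_voice_list(voices: List[dict], locale_prefix: Optional[str] = None) -> List[dict]:
    """Keep only the fields the app needs, optionally filtered by locale prefix."""
    shaped = [
        {
            "code": voice["ShortName"],
            "label": f'{voice["DisplayName"]} ({voice["Locale"]}, {voice["Gender"]})',
            "locale": voice["Locale"],
        }
        for voice in voices
        if locale_prefix is None
        or voice["Locale"].lower().startswith(locale_prefix.lower())
    ]
    # Sort by locale, then label, so the drop-down is stable and easy to scan.
    return sorted(shaped, key=lambda v: (v["locale"], v["label"]))


if __name__ == "__main__":
    # Trimmed sample entries for illustration.
    sample = [
        {"ShortName": "es-ES-ElviraNeural", "DisplayName": "Elvira", "Locale": "es-ES", "Gender": "Female"},
        {"ShortName": "en-US-JennyNeural", "DisplayName": "Jenny", "Locale": "en-US", "Gender": "Female"},
    ]
    for voice in shape_voice_list(sample):
        print(voice["code"], "->", voice["label"])
```

Filtering by the target language's locale prefix keeps the voice drop-down in step with the translation language the user picked.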

I fetch this list early on when the app starts.

Set(vAIVoiceListOutput, GetAIVoices.Run());

ClearCollect(colVoiceList, vAIVoiceListOutput);

Once these items are all set, we can see the integration between the service and app by providing audio samples via UI.

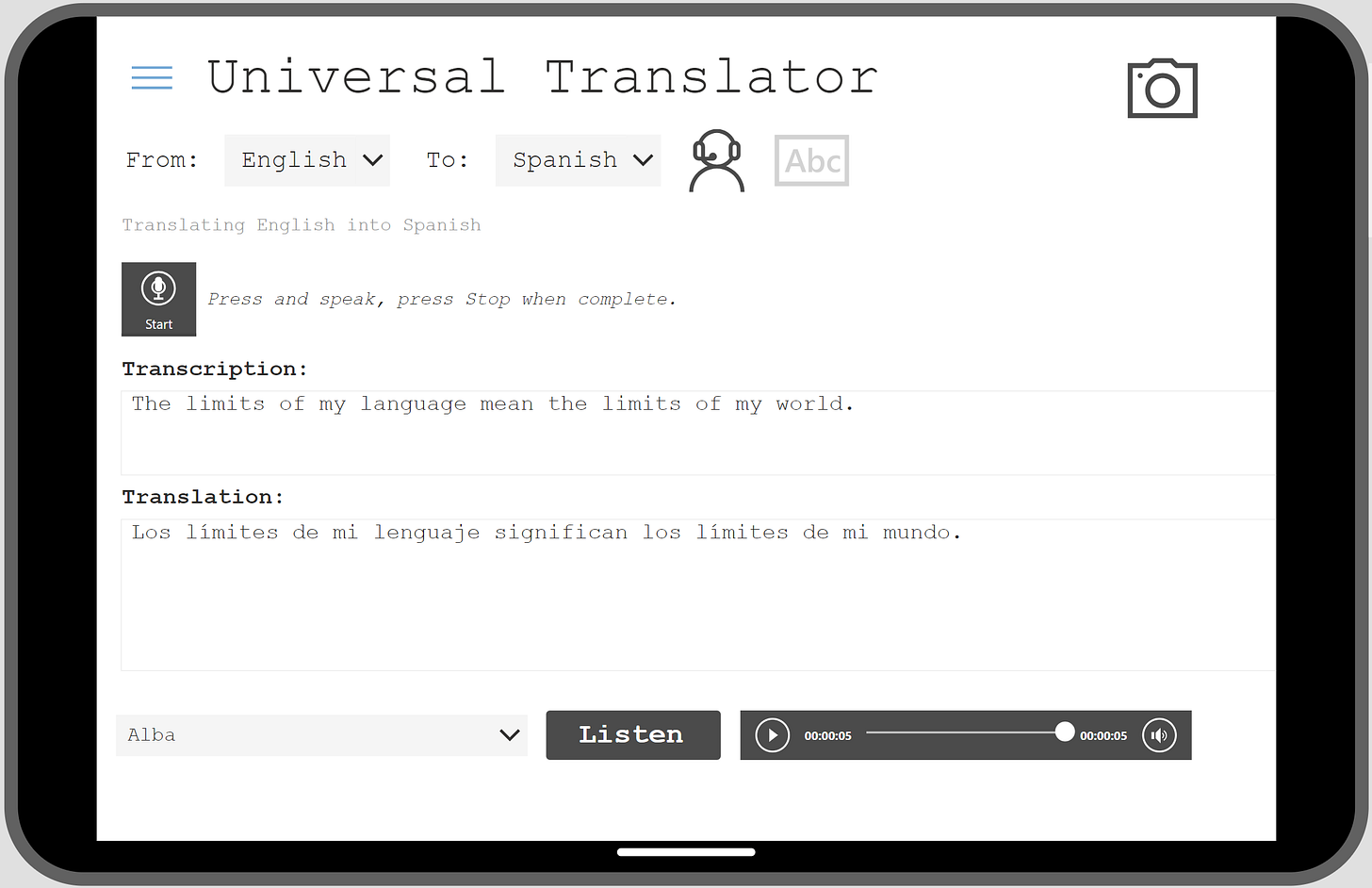

Universal Translator: The App

Now, you can finally ask for directions to the weight room in ANY language using this app.

The features are typical of a translator. For a demo, go to the end of the article.

Capture and transcribe audio.

API: Batch Speech-to-Text

The Microphone control records audio from the user. The control’s OnStop event saves the audio file to storage, which the Batch Speech-to-Text API uses to generate a transcription.

A few ways to do this, but this is where we need to get to Power Fx-wise.

/*1.) Create audio file in storage, return the path.*/
Set(vBlobPath,
    AzureBlobStorage.CreateFile(vStoragePath, vAudioFilename, MicrophoneControl.Audio).Path);

/*2.) Create collection and populate with the results from the primary flow - transcription and translation.*/
ClearCollect(vFlowResponseCollection,
    TranscribeAndTranslateAudio.Run(Parameter1,Parameter2,…));

/*Set variables with flow output.*/
Set(vTranscription, First(vFlowResponseCollection).transcription);
Set(vTranslation, First(vFlowResponseCollection).translation);

Both a translation and transcription will be displayed.

Depending on the size of the audio file, it may take a moment for a result.

Translate transcriptions.

API: Translator

This happens in the same flow as the transcription above: an additional HTTP action sends the transcribed text to the Translator API to be translated into the desired language.

To is the target language. This value is determined by a drop-down in the app, hence the Ask in PowerApps. Note that full IETF language tags seem to work best here: en alone will not work unless you specify the locale, such as en-US or en-GB. Read more about it here: IETF language tag - Wikipedia.

The app in action, so far with sound:

(Vid sped up, translation takes a sec with cool storage)

Now we can create audio of the translated text.

Create audio translation.

API: Text-to-Speech

Once a translation is returned, use the HTTP action to call the Text-to-Speech API to generate an audio file reading out the translation.

Use the AI voice list to provide the user with voices to read their translation aloud.

The Listen button runs a flow that uses the translation output - text - to create the audio file and provides the Audio control with a web URL for it to play.

/*Set variable for file path to audio translation. The Audio control relies on this.*/
Set(vAudioFilepath,
    GetAudioTranslation.Run(
        First(vFlowResponseCollection).translation,
        Substitute(vAudioFilename, ".mp3", "_Translation.mp3"),
        vVoiceCode
    ).vaudiofilepath)

Example with sound:

Clean translations coming through.

Onto testing.

Save activity.

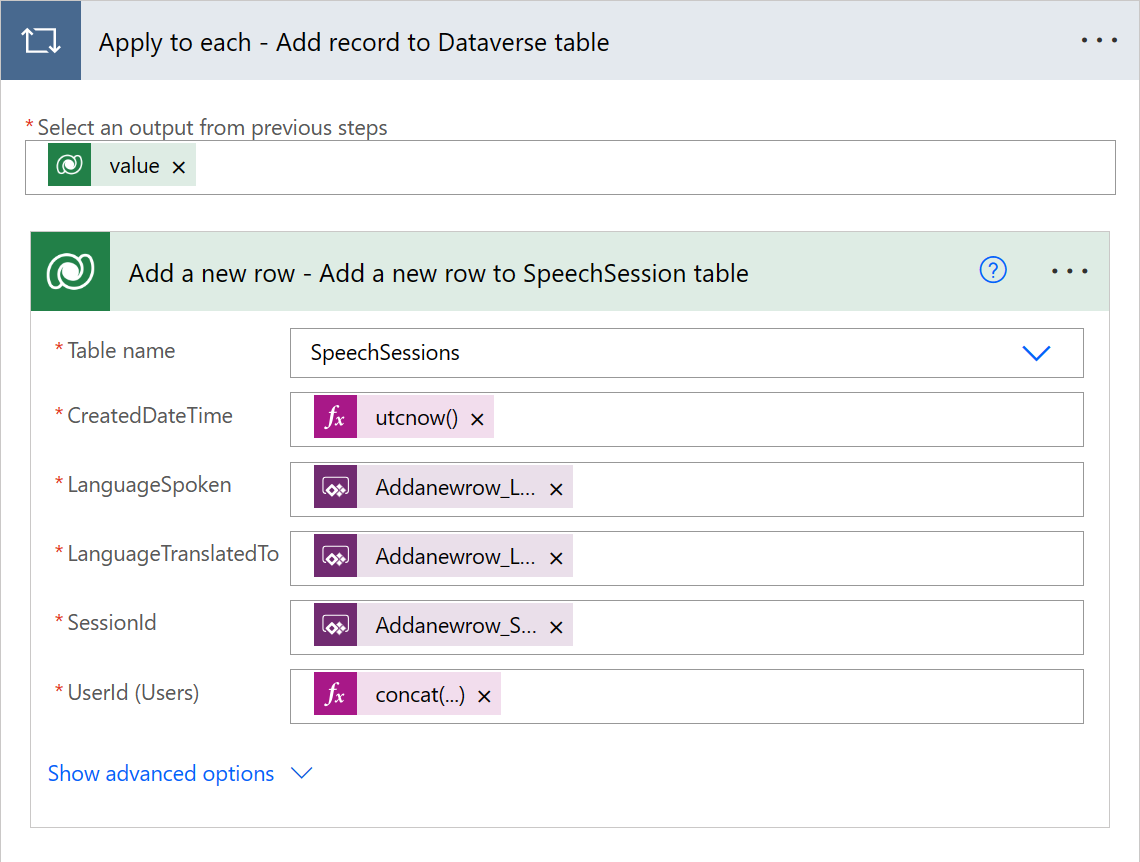

To save the activity, add another flow or patch the records into Dataverse.

SaveActivityToDataverse.Run(vUserId_GUID,vLanguageFrom,vLanguageTo,vSessionId)

A patch also works here, but in case you go the flow route, special attention needs to be paid to the UserId column in the SpeechSession table.

An OData filter expression in the List rows action looks up the user's row:

systemuserid eq triggerBody()['Listrows_Filterrows']

triggerBody()['Listrows_Filterrows'] can also be provided by the app via Ask in PowerApps within the Dynamic Content window.

Once we have the UserId, we add a new row to the table with an Apply to each action.

The expression for the UserId field is essential:

concat('/systemusers(', outputs('List_rows_-_Get_User_GUID')?['body/value'][0]?['systemuserid'], ')')
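The lookup column wants a reference to the user row, not a bare GUID, which is why the expression wraps the ID in `/systemusers(...)`. The Python sketch below builds both strings used in this step, the filter and the row reference, and validates the GUID first. The function names are hypothetical and the GUID in the example is a placeholder.

```python
from uuid import UUID


def user_filter(user_id: str) -> str:
    """OData filter for the List rows action; raises ValueError on a malformed GUID."""
    return f"systemuserid eq {UUID(user_id)}"


def user_bind_reference(user_id: str) -> str:
    """Row reference for the UserId lookup column, in the same shape as the concat() above."""
    return f"/systemusers({UUID(user_id)})"


if __name__ == "__main__":
    guid = "00000000-0000-0000-0000-000000000001"  # placeholder GUID
    print(user_filter(guid))
    print(user_bind_reference(guid))
```

Passing the value through `UUID` normalizes it to lowercase hyphenated form and rejects anything that is not a GUID before it reaches Dataverse.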

Return a status:

Check in Dataverse:

The same can be done to insert records into the SpeechModel and SpeechUtterance tables. The model property, which represents the model used by the Batch STT API, is returned in the initial response.

To save model detail, add a field, Model, to the Compose action.

Select the model property from the Dynamic Content menu. Or, use an expression:

outputs('Get_transcriptions')?['body/model/self']

A concurrent action in the Save operation adds a record to SpeechModel containing the model used in sessions.

LanguageTranslatedTo and OData Id should be available in the Dynamic Content menu. Select those properties from the previous Add a new row action.

SpeechUtterance involves another Apply to each action. Alternatively, the entire JSON string can be saved for parsing later on.
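As a sketch of what that Apply to each loop does, the Python below turns the phrases in a batch transcription result into SpeechUtterance-style rows. It assumes the result file contains a `recognizedPhrases` array whose items carry `offsetInTicks` and an `nBest` list with a `display` string; the function name and sample payload are illustrative only.

```python
from typing import List


def to_utterance_rows(transcription_result: dict, session_id: str) -> List[dict]:
    """Map recognized phrases to rows with a 1-based UtteranceOrder, in spoken order."""
    phrases = sorted(
        transcription_result.get("recognizedPhrases", []),
        key=lambda phrase: phrase.get("offsetInTicks", 0),
    )
    rows: List[dict] = []
    for phrase in phrases:
        candidates = phrase.get("nBest") or []
        if not candidates:
            continue  # nothing was recognized for this phrase
        rows.append(
            {
                "SessionId": session_id,
                "UtteranceText": candidates[0].get("display", ""),
                "UtteranceOrder": len(rows) + 1,
            }
        )
    return rows


if __name__ == "__main__":
    # Illustrative payload, trimmed to the fields used above.
    sample = {
        "recognizedPhrases": [
            {"offsetInTicks": 50000000, "nBest": [{"display": "Where is the weight room?"}]},
            {"offsetInTicks": 10000000, "nBest": [{"display": "Excuse me."}]},
        ]
    }
    for row in to_utterance_rows(sample, "session-1"):
        print(row)
```

UtteranceTranslation is left out here because the flow in this guide translates the combined transcript, not each phrase separately.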

For a single record, we may not need to nest our operations in Apply to each actions; however, I would like to add a bulk operation for, say, multiple file upload with file size validation. They will come in handy later on.

Final flows

Get AI Voices

Capture, Transcribe, and Translate Audio

Note: Add a Delete transcription action to delete the transcription contents. This action is already available in the template flow.

Get audio translation


Looking good, strong start. Thanks to our translator, everyone can participate in the big event. Kid, you’re a star!

Test the App

The testing steps are not much different than the MS Challenge suggestions:

Run the app, start a new translation.

Press the Microphone control to record speech.

Use drop-down to select translation language.

Press the button to trigger text-to-speech.

Listen to the translated text.

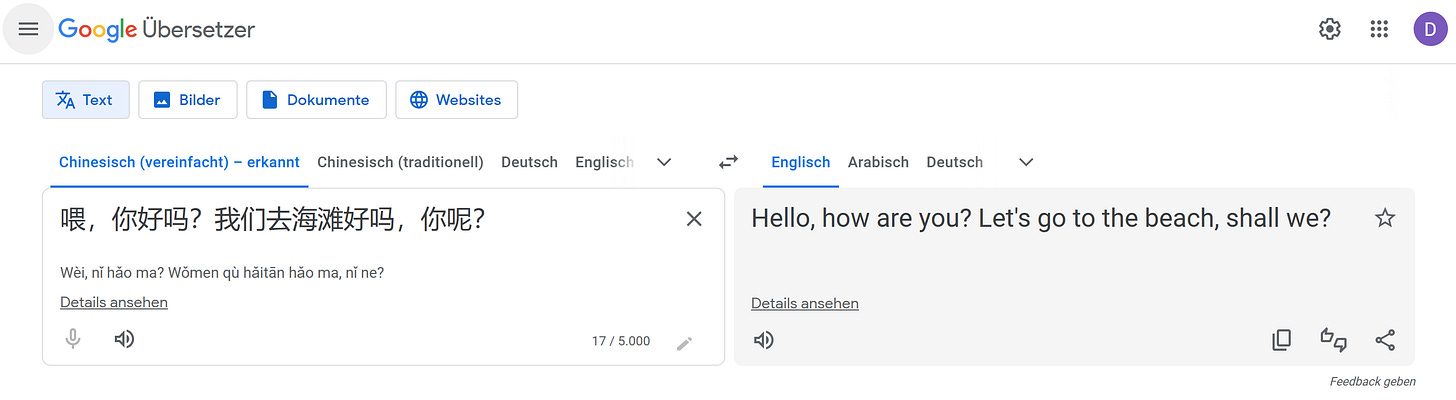

Let’s try it ourselves.

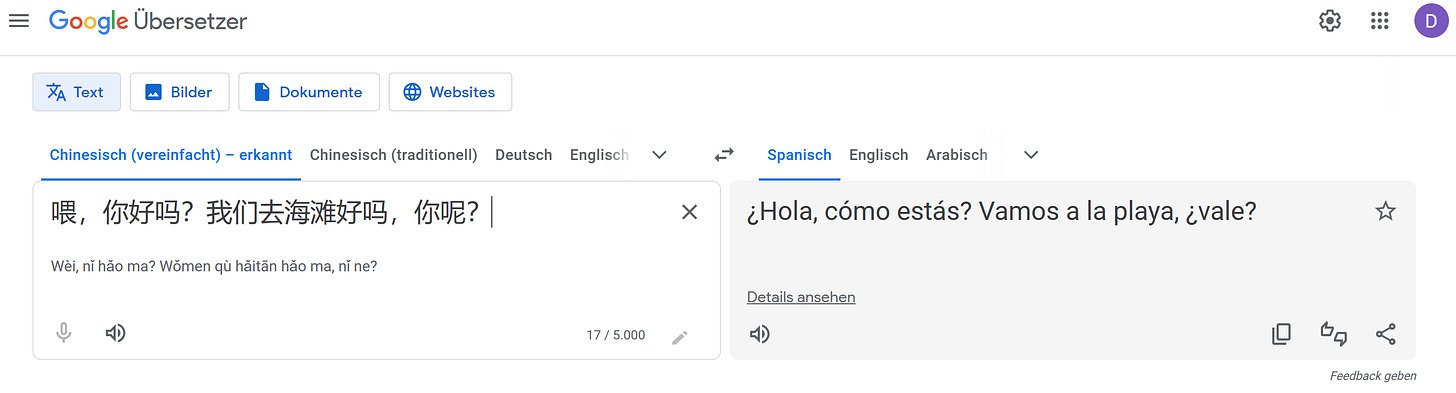

English to Spanish:

German to English:

Now, Spanish to Chinese:

Hopefully, your experience is similar, and your translator can do the same.

The master stroke: validation.

Eggscellent. See you at the party!


Resources

Challenge Project - Build a Speech Translator App - Training | Microsoft Learn

Build a multi-language app - Power Apps | Microsoft Learn

Transcribe audio to text from Azure blob without writing any code using Power Automate (microsoft.com)

About the Speech SDK - Speech service - Azure AI services | Microsoft Learn

Speech to text REST API for short audio - Speech service - Azure AI services | Microsoft Learn

Speech to text REST API - Speech service - Azure AI services | Microsoft Learn

Batch transcription overview - Speech service - Azure AI services | Microsoft Learn

Text to speech API reference (REST) - Speech service - Azure AI services | Microsoft Learn

Language and country codes (ISO IETF RFC .NET LCID) - Table (venea.net)

ISO Language Codes (639-1 and 693-2) and IETF Language Types - Dataset - DataHub - Frictionless Data