Clockwork

The Wild and Wonderful World of Supported Languages in Azure AI Services

Overview

All it takes is five simple characters to make a critical distinction between a language's regional variants: American and British English, Swiss and Austrian German, Moroccan and Algerian French, to name only a few. Billions of people depend on this differentiation in everyday life, and there are even separate codes for areas historically locked in competition for linguistic dominance. That's rich.

While cross-border skirmishes over vocabulary are not too common, certain tools of mass communication - the Internet, for one - use the BCP-47 code set to deliver content in websites, user interfaces, and device settings to the innumerable cultures around the world. The codes are a mutant standard composed of at least two other ISO standards, 639-1 and 3166-1, forming a language-region combination out of two separate codes, or “tags.”
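The following minimal Python sketch shows the language-region split. It assumes tags arrive in the conventional casing: lowercase language, Titlecase script, and uppercase region. The function `split_locale` and its pattern are illustrative only. They accept the common `language[-Script][-REGION]` shape and reject everything else that full BCP-47 (RFC 5646) allows, such as numeric regions, variants, and extensions.

```python
import re

# Simplified locale pattern (illustrative, not a full RFC 5646 grammar):
#   language: 2-3 lowercase letters (ISO 639)
#   script:   optional, 4 letters in Titlecase (e.g. Latn), as in tlh-Latn
#   region:   optional, 2 uppercase letters (ISO 3166-1 alpha-2)
_SIMPLE_LOCALE = re.compile(
    r"^(?P<language>[a-z]{2,3})"
    r"(?:-(?P<script>[A-Z][a-z]{3}))?"
    r"(?:-(?P<region>[A-Z]{2}))?$"
)


def split_locale(tag: str) -> dict:
    """Split a tag such as 'en-GB' into language, script, and region subtags."""
    match = _SIMPLE_LOCALE.match(tag)
    if match is None:
        raise ValueError(f"unsupported or malformed tag: {tag!r}")
    return match.groupdict()


# Regional variants share a language subtag and differ only in the region.
for tag in ("en-US", "en-GB", "de-CH", "de-AT", "fr-MA", "fr-DZ"):
    parts = split_locale(tag)
    print(tag, "->", parts["language"], parts["region"])
```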

In a Microsoft world, including Azure, this code is referred to as the locale, and it’s integral to providing localized content, i.e., media that’s understandable to a particular audience based on their location and language preferences/settings. Without this, we're a bit up the creek and speaking in tongues over who gets to paddle.

So, serving a global audience requires using the correct language code and its respective regional indicator. There must be a list of these pairings somewhere for us to use. Right?

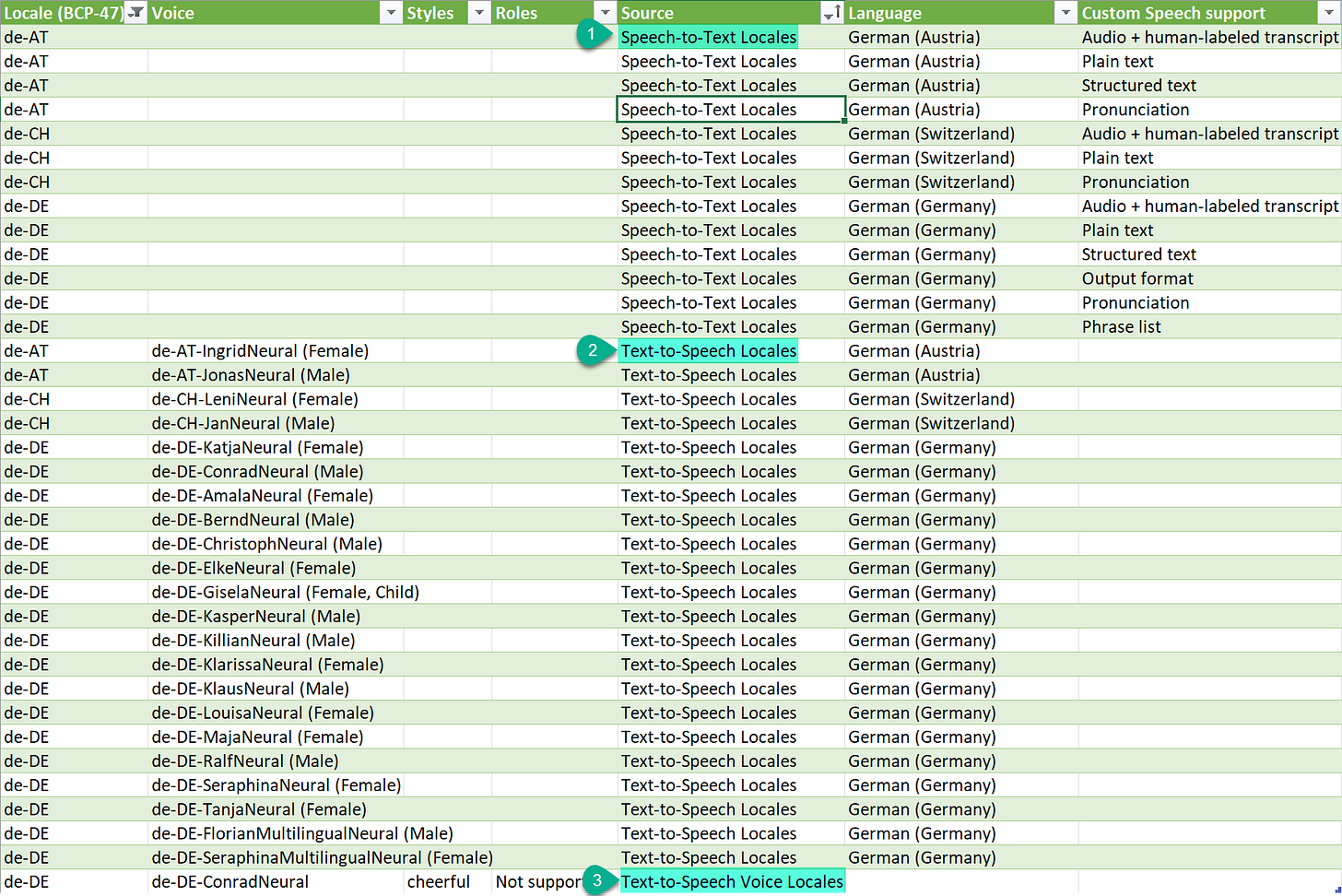

There’s the issue: no single, consolidated list of supported locales exists across Azure products and services. In fact, there are more than ten lists for the Speech and Language services alone, because not all services have the same language capabilities. Further, finding a locale that is supported in one service but not the other (and vice versa) means manually comparing lists from MS Learn.
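That manual comparison is really a set operation. Below is a minimal Python sketch. The locale sets are made-up illustrative values, not the actual support lists for either service, and `compare_locales` is a hypothetical helper.

```python
def compare_locales(first: set, second: set) -> dict:
    """Report locales supported by both services and by only one of them."""
    return {
        "both": sorted(first & second),
        "only_first": sorted(first - second),
        "only_second": sorted(second - first),
    }


# Illustrative values only; real lists would come from MS Learn or the endpoints.
stt_locales = {"en-US", "en-GB", "de-CH", "fr-MA"}
tts_locales = {"en-US", "en-GB", "de-AT", "fr-MA"}

result = compare_locales(stt_locales, tts_locales)
print(result["only_first"])   # ['de-CH']: offer for STT, but not for TTS
```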

If you’re using or offering services such as Text-to-Speech (TTS) *and* Speech-to-Text (STT), presenting language options for each feature to a user may therefore require separate data sets. Otherwise, a user may be offered a locale that one of the features does not support.

Locales and voices are also actively introduced, updated, and even retired, so it’s worth reviewing options to automatically manage this information.

A few solutions can get us there. Power Query ingests tables from a reference website for a quick win. REST APIs, part of the Azure AI Speech service offering, also help manage the feral matrix of supported locales between services. Both methods are extendable: an automated or scheduled process identifies any changes and additions against an existing list of languages, locales, and voices. The deltas are then reviewed and deployed (or not), and that list is used downstream to pass well-formed BCP-47 code(s) to a service endpoint. It’s alive.
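The delta step takes only a few lines of Python. This minimal sketch assumes each snapshot is a dictionary keyed by a stable identifier (here, hypothetical voice names), with values holding the attributes you care about. `diff_snapshots` is a hypothetical helper, and the sample records are invented.

```python
def diff_snapshots(old: dict, new: dict) -> dict:
    """Compare two keyed snapshots of reference data.

    Returns the keys that were added, removed, or whose attributes changed,
    each as a sorted list so the output is stable for review.
    """
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


# Hypothetical snapshots from two scheduled runs.
previous = {"en-US-VoiceA": {"Status": "Preview"}, "fr-FR-VoiceB": {"Status": "GA"}}
current = {"en-US-VoiceA": {"Status": "GA"}, "de-CH-VoiceC": {"Status": "Preview"}}

print(diff_snapshots(previous, current))
# {'added': ['de-CH-VoiceC'], 'removed': ['fr-FR-VoiceB'], 'changed': ['en-US-VoiceA']}
```

Only the three delta lists need human review. The full snapshot remains the list that feeds downstream calls.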

All that data wrangling can be automated, and it moves - you guessed it - like clockwork. Happy Holidays.

/**/

This article presents various standalone ETL solutions using Power Query and Azure to help auto-manage evolving reference data sets.

Caveats

Value. Less manual work means more time developing features, not data wrangling. While these are valid use-cases, they are definitely not the only ones. They are meant to get you thinking about using the tools - not necessarily these tools - to make your working life a little bit easier.

References. I highly recommend perusing the References and Resources section. This article only scratches the surface of the intersection between language, human interaction, and technology.

Scope. Only the supported languages and locales for Azure AI Speech services are covered. The Language service is separate but has similar table-based information on MS Learn.

Region availability. Not all services or capabilities are available in every region. Check the region support documentation on MS Learn.

API versions. We will be using version 3.2 of the Speech-to-Text REST API for the API calls.
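All of the version 3.2 `/locales` operations share one URL shape, and the small Python helper below makes that explicit. It assumes the regional host pattern `https://{region}.api.cognitive.microsoft.com/speechtotext/v3.2/...` from the Speech to text REST API reference. Resources with custom domains use a different host. `stt_locales_url` is a hypothetical name.

```python
# Speech to text REST API areas that expose a GET .../locales operation
# (the same areas walked through in Case study #1).
STT_LOCALE_AREAS = (
    "datasets", "endpoints", "evaluations", "models", "projects", "transcriptions",
)


def stt_locales_url(region: str, area: str, version: str = "v3.2") -> str:
    """Build the /locales URL for one area, e.g. transcriptions, in a region."""
    if area not in STT_LOCALE_AREAS:
        raise ValueError(f"unknown area {area!r}; expected one of {STT_LOCALE_AREAS}")
    return (
        f"https://{region}.api.cognitive.microsoft.com"
        f"/speechtotext/{version}/{area}/locales"
    )


print(stt_locales_url("eastus", "transcriptions"))
# https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/locales
```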

STT vs. Translation. Speech-to-Text (STT) converts spoken language into written text (aka transcription), while speech translation transcribes and translates into another language in real time. The two services have different sets of supported languages. STT can also be called through the REST API for short audio, whereas speech translation uses the SDK.

Prerequisites

Basic “system requirements” depend on the solution chosen.

GitHub repo/Teams/Outlook for receiving notifications (optional).

Excel and Power Query for bootstrapped extracts, or Power Automate to orchestrate scheduled data extracts.

Database or Excel for storing extracted data.

Azure subscription to run Speech services.

Speech service resources, endpoints, and keys.

All set.

Additional Info

SDK solution? Supported languages and locales may not always be available via the SDK, but there are notable exceptions (see link).

Error handling only partially solves the problem when a service returns an error for an unsupported locale code. Why display a locale if it’s not supported in that feature? Kind of a let-down to the user, but that is only my opinion.

Side journey. It is important to note that language codes without a region (en, fr, de) still work straightaway: where multiple options exist, a default locale is used if none is specified.

Using Klingon as an example, both tlh-Latn for the Latin script and tlh-Piqd for the pIqaD script are supported in the Azure Translator service for text translation, with tlh-Latn as the default. Naturally, the same goes for human languages with variants - English, Spanish, German, etc.
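On the client side, you can approximate a similar fallback with the "Lookup" matching scheme from RFC 4647: strip subtags from the right until something matches, or otherwise fall back to a default. This is a swapped-in technique, not how Azure picks its default. `best_locale` is a hypothetical helper, and the default is whatever the caller chooses.

```python
def best_locale(requested: str, supported: list, default: str) -> str:
    """RFC 4647-style lookup: truncate the requested tag until it matches.

    Matching is case-insensitive; the supported tag is returned in its
    original casing. Falls back to `default` when nothing matches.
    """
    available = {tag.lower(): tag for tag in supported}
    candidate = requested.lower()
    while candidate:
        if candidate in available:
            return available[candidate]
        # Remove the last subtag ...
        candidate = candidate.rpartition("-")[0]
        # ... and a trailing single-character subtag (e.g. the 'x' of a
        # private-use sequence), as RFC 4647 section 3.4 describes.
        if len(candidate) >= 2 and candidate[-2] == "-":
            candidate = candidate[:-2]
    return default


supported = ["tlh-Latn", "tlh-Piqd", "en-US", "de"]
print(best_locale("de-CH", supported, default="en-US"))     # de
print(best_locale("TLH-piqd", supported, default="en-US"))  # tlh-Piqd
print(best_locale("ja-JP", supported, default="en-US"))     # en-US
```

Note the direction: lookup only shortens the request, so a bare `en` will not match `en-US`. Mapping a bare language to a regional default needs an explicit table.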

General Process

This can be accomplished through various methods. See below.

Case studies and solutions

Case study #1: REST API Collection

New multilingual voices for the Speech service have just been announced. This brings the total number of languages and locales for TTS voices and personas from 41 to 91.

A language services company specializing in transcription, translation, and captioning hosts a web product for the public. They would like to add the newly available options to the choices offered by their popular text-to-speech voice feature. It looks like a manual job, but analysts found that it can be done faster using the /locales operations exposed by the service REST endpoints.

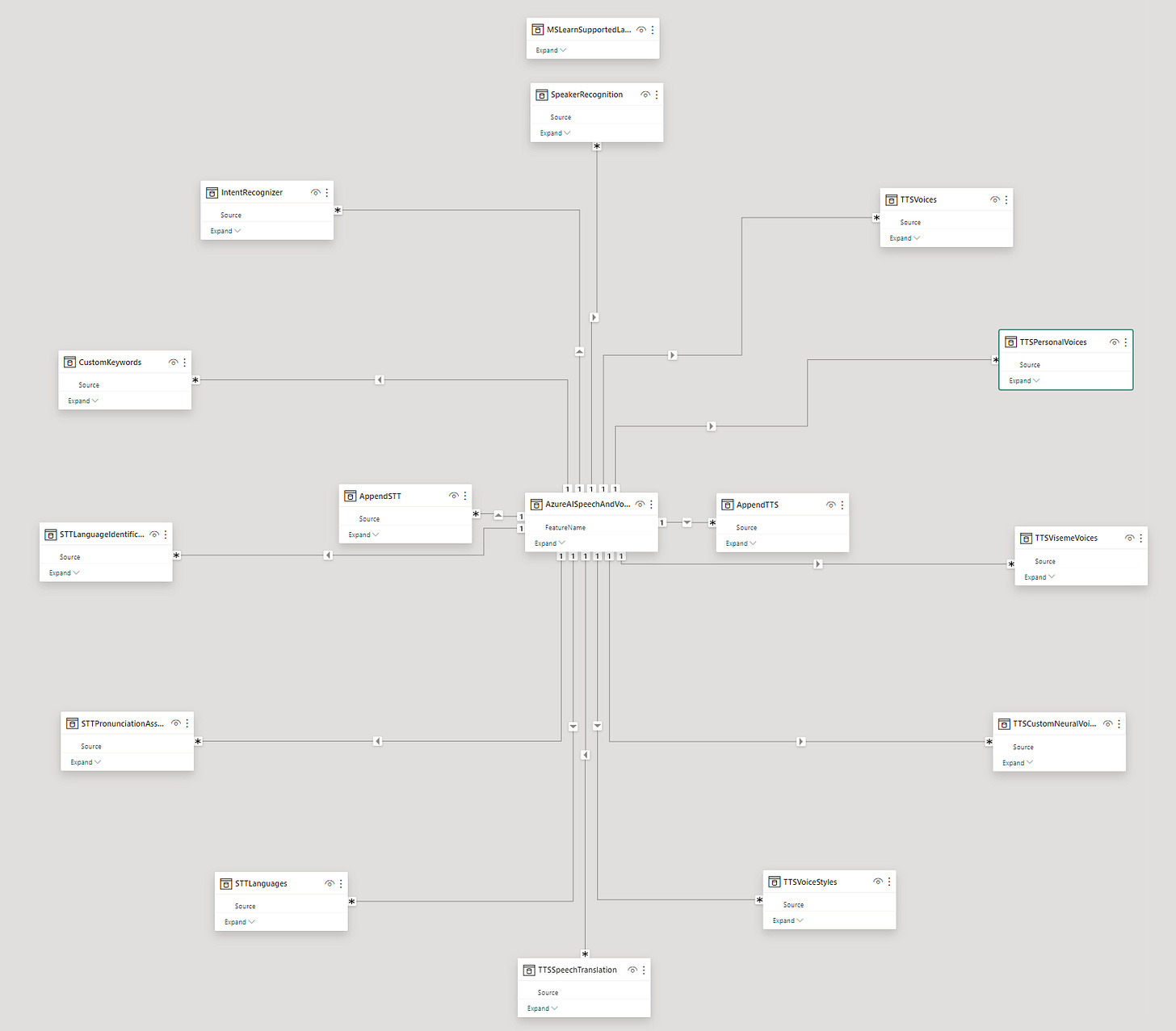

Azure documentation points to endpoints containing supported locale information for a number of areas; however, they do not correspond neatly to the services featured on MS Learn.

Datasets. The various types of data that can be used to train speech recognition models: Acoustic, Pronunciation, and Audio, to name a few. Corresponds to Speech-to-Text.

Endpoints. The supported locales for user-created service endpoints.

Evaluations. Performance monitor for speech recognition models. Corresponds to the Pronunciation Assessment. SDK only.

Models. Pre-built or custom-trained models used for speech recognition, speech synthesis, translation, etc. Speech-to-Text.

Projects. The workspace where speech service operations are set up and managed.

Transcriptions. Live or recorded audio converted into text. Speech-to-Text.

Translation (not pictured). Real-time translation from one language to another. This uses the separate Translator feature in the Speech service.

Voices (not pictured). The voices available for synthesizing text-to-speech content. Response includes all supported locales, voices, gender, styles, and other details. Corresponds to Text-to-Speech and Voices, Styles and Roles.

Transcriptions (6), translations (7), and a multilingual voice list (8) suffice for now.
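Pulling one of these lists down is a single authenticated GET. The sketch below uses only the Python standard library and rests on three assumptions, each of which should be checked against a live response:

- Key-based authentication uses the `Ocp-Apim-Subscription-Key` header.
- The voices list URL follows the pattern `https://{region}.tts.speech.microsoft.com/cognitiveservices/voices/list`.
- Multilingual voices carry a `SecondaryLocaleList` field.

`fetch_json` and `multilingual_voices` are hypothetical helpers.

```python
import json
import urllib.request


def fetch_json(url: str, key: str):
    """GET a Speech service URL and decode the JSON body."""
    request = urllib.request.Request(url, headers={"Ocp-Apim-Subscription-Key": key})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def multilingual_voices(voices: list) -> dict:
    """Map ShortName -> secondary locales for voices that list any."""
    return {
        voice["ShortName"]: voice["SecondaryLocaleList"]
        for voice in voices
        if voice.get("SecondaryLocaleList")
    }


# Example (requires a Speech resource; region and key are placeholders):
# voices = fetch_json(
#     "https://eastus.tts.speech.microsoft.com/cognitiveservices/voices/list",
#     "<your-speech-key>",
# )
# for name, locales in multilingual_voices(voices).items():
#     print(name, len(locales))
```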

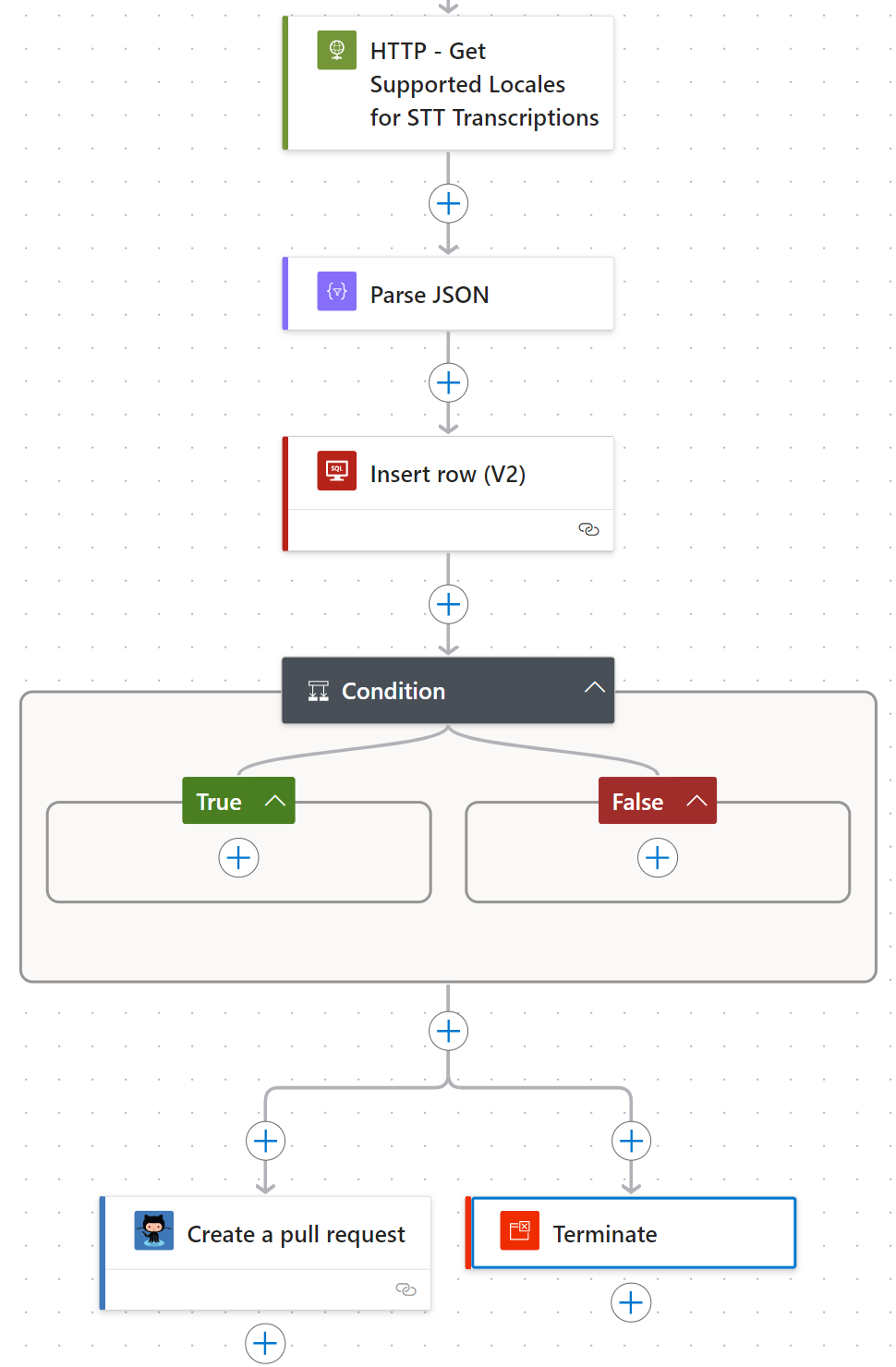

Using Transcriptions as an example, Power Automate easily extracts and parses the result into a data store, and the options can ultimately be presented to the user or elsewhere.
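The same extract-and-store step can also be sketched outside Power Automate. Here SQLite stands in for whatever data store you use (an assumption), and the table and function names are hypothetical. The composite key keeps one row per locale per source, so re-running a scheduled extract does not create duplicates.

```python
import sqlite3


def store_locales(conn: sqlite3.Connection, source: str, locales: list) -> int:
    """Insert (source, locale) rows, skipping ones already stored.

    Returns the number of locales now stored for that source.
    """
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS supported_locales ("
            " source TEXT NOT NULL,"
            " locale TEXT NOT NULL,"
            " PRIMARY KEY (source, locale))"
        )
        conn.executemany(
            "INSERT OR IGNORE INTO supported_locales (source, locale) VALUES (?, ?)",
            [(source, locale) for locale in locales],
        )
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM supported_locales WHERE source = ?", (source,)
    ).fetchone()
    return count


conn = sqlite3.connect(":memory:")
print(store_locales(conn, "transcriptions", ["en-US", "de-CH"]))  # 2
print(store_locales(conn, "transcriptions", ["en-US", "fr-MA"]))  # 3
```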

A scheduled extract is possible for monitoring changes and additions to offerings.

A condition pushes the item(s) to a CI/CD pipeline for review and approval prior to inclusion.
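Here is a minimal sketch of that condition, assuming the delta takes the form of added, removed, and changed lists. When anything changed, the hypothetical `review_gate` returns a non-zero exit code and a JSON report, which a pipeline step could use to stop for human approval. How the report is attached, and who approves it, depends on your CI/CD tooling.

```python
import json


def review_gate(delta: dict) -> tuple:
    """Return (exit_code, report) for a pipeline step.

    exit_code is 0 when nothing changed and 1 when a reviewer should look at
    the JSON report before the new reference data is deployed.
    """
    pending = {kind: sorted(items) for kind, items in delta.items() if items}
    report = json.dumps(pending, indent=2, sort_keys=True)
    return (1 if pending else 0), report


code, report = review_gate({"added": ["de-CH-VoiceC"], "removed": [], "changed": []})
print(code)    # 1
print(report)
# In a pipeline step: write `report` to an artifact file, then sys.exit(code).
```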

Nice and neat, for the most part.

Case study #2: Power Query + Excel

A small AI startup set to launch their own web product uses Azure Speech and Language services; however, it is hard to keep on top of all supported languages and locales. The SDK does not have a direct way to extract this information either.

MS Learn provides a comprehensive list and the content is regularly updated to include the latest additions and changes in the service. The startup wants to set itself apart, and they need to do it fast, so they use Power Query for “a tactical kludge.”

Engineers solved this quickly: they used Power Query from within Excel to connect to the MS Learn website and then extract the tables containing supported locales…with a few caveats, of course.

Although this actually works quite well, it may not be a strategic solution, as the MS Learn webpage is subject to change at any given moment. If a starter list is all that is needed, the tables from the supported languages article will suffice.

No better authority than the endpoint itself, I would say, but the separate Language service does not have its own /locales endpoint like the Speech service does. Out of luck there, but, again, MS Learn, in full glory, has all the reference data that the group needs in table format.

That is quick work for Power Query, but the logic is somewhat repetitive, so a dataflow is recommended in this instance.

A dataflow works nicely here; however, the query does not fold, which presents additional risk. What to do?

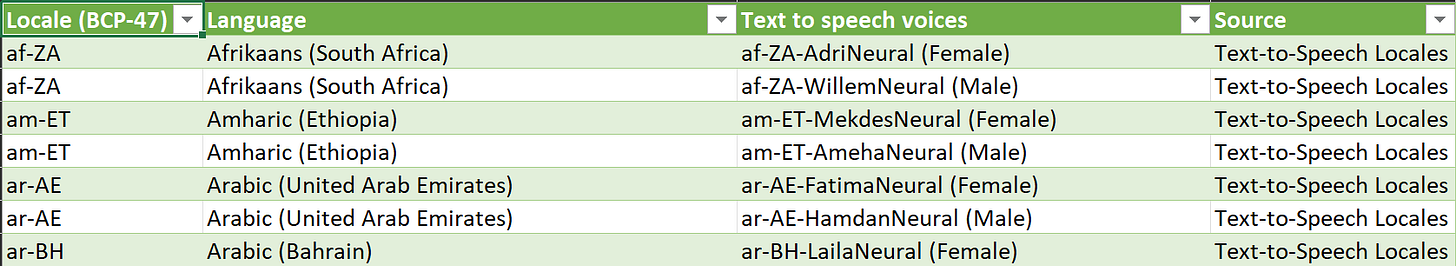

Enter the Old Boy: Microsoft Excel, where we create individual lists and then combine them into a single master list with an Append query.

On the Data tab, select Get Data > From Other Sources > From Web. Use the MS Learn website URL as the source:

https://learn.microsoft.com/en-us/azure/ai-services/speech-service/language-support

Next, transform the results. A little bit of massaging is necessary, but it’s a one-time deal.
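Power Query's From Web connector does the table detection for you. For comparison, here is a standard-library Python sketch of the same idea: it collects the cell text of every `<table>` on a page. The sketch has three limitations:

- It assumes the page renders its tables as plain HTML with explicit closing tags.
- It ignores nested tables.
- Like the Power Query approach, it will need adjusting whenever the MS Learn layout changes.

`TableExtractor` is a hypothetical class name.

```python
from html.parser import HTMLParser
from typing import List, Optional


class TableExtractor(HTMLParser):
    """Collect every <table> on a page as a list of rows of cell text."""

    def __init__(self) -> None:
        super().__init__()
        self.tables: List[List[List[str]]] = []
        self._row: Optional[List[str]] = None
        self._cell: Optional[List[str]] = None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            # Collapse whitespace so "English\n (United Kingdom)" becomes one line.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


# Usage against the live page (network access required):
# import urllib.request
# url = "https://learn.microsoft.com/en-us/azure/ai-services/speech-service/language-support"
# with urllib.request.urlopen(url) as response:
#     parser = TableExtractor()
#     parser.feed(response.read().decode("utf-8"))
# print(len(parser.tables))
```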


The final Append query to create the master list:

```
let
    Source = Table.Combine({#"Voice Locales", #"STT Locales", #"TTS Locales"})
in
    Source
```

The result is a single master list of supported locales.
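`Table.Combine` stacks the rows, but it does not record which list each row came from. The Power App formula that follows, however, filters on a `Source` column. Presumably each per-feature query already carries such a column. If not, one way to add it is sketched here in Python, under that assumption. `append_with_source` is a hypothetical helper, and column names other than `Source` are illustrative.

```python
def append_with_source(tables: dict) -> list:
    """Append several per-feature tables into one master list.

    `tables` maps a source name (e.g. "STT Locales") to a list of row dicts;
    each output row is a copy of the input row plus a "Source" column.
    """
    master = []
    for source, rows in tables.items():
        for row in rows:
            master.append({**row, "Source": source})
    return master


master = append_with_source({
    "STT Locales": [{"Locale": "en-US"}],
    "TTS Locales": [{"Locale": "en-US"}, {"Locale": "de-CH"}],
})
print(len(master))  # 3
```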

This opens up possibilities for us. We can send these records upstream to a database, compare the results above to an existing list with Merge Queries or left outer join, and even notify interested parties for review/approval using Data Alerts or a CI/CD pipeline trigger.

If Excel is a home base of sorts, however, I recommend looking at Office Scripts, particularly the cross-application scenarios.

Power Automate is also a possibility.

Pretty sure that you cannot refresh an Excel Online file, but a scheduled flow helps us out here.

Finally, we can integrate this master list within a Power App:

```
GroupBy(
    Filter(
        LanguageCollection,
        // Filter condition based on feature dynamically displays the correct list of supported locales.
        Source = vSpeechFeature
    ),
    "Column1",
    "Column2",
    "GroupedCollection"
)
```

Like I said: scrappy.
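Read as plain logic, that formula keeps the rows whose `Source` matches the selected feature. It then groups them by `Column1` and `Column2`, nesting the remaining columns under `GroupedCollection`. The rough Python equivalent below is for readers who want to test the grouping outside Power Apps. The function name and sample rows are hypothetical, and what Column1 and Column2 actually hold depends on how the master list was shaped.

```python
from collections import defaultdict


def group_for_feature(master: list, feature: str) -> dict:
    """Mimic GroupBy(Filter(master, Source = feature), "Column1", "Column2", ...).

    Keys are (Column1, Column2) pairs; each value lists the remaining columns
    of the matching rows, like the nested GroupedCollection table.
    """
    grouped = defaultdict(list)
    for row in master:
        if row["Source"] == feature:
            key = (row["Column1"], row["Column2"])
            rest = {k: v for k, v in row.items() if k not in ("Column1", "Column2")}
            grouped[key].append(rest)
    return dict(grouped)


rows = [
    {"Source": "STT", "Column1": "English", "Column2": "en-GB"},
    {"Source": "TTS", "Column1": "English", "Column2": "en-GB"},
    {"Source": "STT", "Column1": "German", "Column2": "de-CH"},
]
print(sorted(group_for_feature(rows, "STT")))
# [('English', 'en-GB'), ('German', 'de-CH')]
```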

/**/

E pluribus unum: out of many, one. And that's how we get our master list of supported locales. Enjoy.

/**/

References and Resources

Standards

Microsoft

Understand user profile languages and app manifest languages - Windows apps

Speech to text REST API - Speech service - Azure AI services

Virtual Network support for Translator service: selected network and private endpoint configuration and support

Community

Language

Additional links inline.